Singapore Trip

In December 2024, I went on a trip through four countries - Singapore, Malaysia, Brunei, and Vietnam - with my friend Badri. This post covers our experiences in Singapore.

I took an IndiGo flight from Delhi to Singapore, with a layover in Chennai. At the Chennai airport, I was joined by Badri. We had an early morning flight from Chennai that would land in Singapore in the afternoon. Within 48 hours of our scheduled arrival in Singapore, we submitted an arrival card online. At immigration, we simply needed to scan our passports at the gates, which opened automatically to let us through, and then give our address to an official nearby. The process was quick and smooth, but it unfortunately meant that we didn’t get our passports stamped by Singapore.

Before I left the airport, I wanted to visit the nature-themed park with a fountain I saw in pictures online. It is called Jewel Changi, and it took quite some walking to get there. After reaching the park, we saw a fountain that could be seen from all the levels. We roamed around for a couple of hours, then proceeded to the airport metro station to get to our hotel.

A shot of Jewel Changi.

There were four ATMs on the way to the metro station, but none of them provided us with any cash. This was the first country (outside India, of course!) where my card didn’t work at ATMs.

To use the metro, one can tap the EZ-Link card or bank cards at the AFC gates to get in. You cannot buy tickets using cash. Before boarding the metro, I used my credit card to get Badri an EZ-Link card from a vending machine. It was 10 Singapore dollars (₹630) - 5 for the card, and 5 for the balance. I had planned to use my Visa credit card to pay for my own fare. I was relieved to see that my card worked, and I passed through the AFC gates.

We had booked our stay at a hostel named Campbell’s Inn, which was the cheapest we could find in Singapore. It was ₹1500 per night for dorm beds. The hostel was located in Little India. While Little India has an eponymous metro station, the one closest to our hostel was Rochor.

On the way to the hostel, we found out that our booking had been canceled.

We had booked from the Hostelworld website, opting to pay the deposit in advance and to pay the balance amount in person upon reaching. However, Hostelworld still tried to charge Badri’s card again before our arrival. When the unauthorized charge failed, they sent an automatic message saying “we tried to charge” and to contact them soon to avoid cancellation, which we couldn’t do as we were in the plane.

Despite this, we went to the hostel to check the status of our booking.

The trip from the airport to Rochor required a couple of transfers. It was 2 Singapore dollars (approx. ₹130) and took approximately an hour.

Upon reaching the hostel, we were informed that our booking had indeed been canceled, and were not given any reason for the cancelation. Furthermore, no beds were available at the hostel for us to book on the spot.

We decided to roam around and look for accommodation at other hostels in the area. Soon, we found a hostel by the name of Snooze Inn, which had two beds available. It was 36 Singapore dollars per person (around ₹2300) for a dormitory bed. Snooze Inn advertised supporting RuPay cards and UPI. Some other places in that area did the same. We paid using my card. We checked in and slept for a couple of hours after taking a shower.

By the time we woke up, it was dark. We met Praveen’s friend Sabeel to get my FLX1 phone. We also went to Mustafa Center nearby to exchange Indian rupees for Singapore dollars. Mustafa Center also had a shopping center with shops selling electronic items and souvenirs, among other things. When we were dropping off Sabeel at a bus stop, we discovered that the bus stops in Singapore had a digital board mentioning the bus routes for the stop and the number of minutes each bus was going to take.

In addition to an organized bus system, Singapore had good pedestrian infrastructure. There were traffic lights and zebra crossings for pedestrians to cross the roads. Unlike in Indian cities, rules were being followed. Cars would stop for pedestrians at unmanaged zebra crossings; pedestrians would in turn wait for their crossing signal to turn green before attempting to walk across. Therefore, walking in Singapore was easy.

Traffic rules were taken so seriously in Singapore I (as a pedestrian) was afraid of unintentionally breaking them, which could get me in trouble, as breaking rules is dealt with heavy fines in the country. For example, crossing roads without using a marked crossing (while being within 50 meters of it) - also known as jaywalking - is an offence in Singapore.

Moreover, the streets were litter-free, and cleanliness seemed like an obsession.

After exploring Mustafa Center, we went to a nearby 7-Eleven to top up Badri’s EZ-Link card. He gave 20 Singapore dollars for the recharge, which credited the card by 19.40 Singapore dollars (0.6 dollars being the recharge fee).

When I was planning this trip, I discovered that the World Chess Championship match was being held in Singapore. I seized the opportunity and bought a ticket in advance. The next day - the 5th of December - I went to watch the 9th game between Gukesh Dommaraju of India and Ding Liren of China. The venue was a hotel on Sentosa Island, and the ticket was 70 Singapore dollars, which was around ₹4000 at the time.

We checked out from our hostel in the morning, as we were planning to stay with Badri’s aunt that night. We had breakfast at a place in Little India. Then we took a couple of buses, followed by a walk to Sentosa Island. Paying the fare for the buses was similar to the metro - I tapped my credit card in the bus, while Badri tapped his EZ-Link card. We also had to tap it while getting off.

If you are tapping your credit card to use public transport in Singapore, keep in mind that the total amount of all the trips taken on a day is deducted at the end. This makes it hard to determine the cost of individual trips. For example, I could take a bus and get off after tapping my card, but I would have no way to determine how much this journey cost.

When you tap in, the maximum fare amount gets deducted. When you tap out, the balance amount gets refunded (if it’s a shorter journey than the maximum fare one). So, there is incentive for passengers not to get off without tapping out. Going by your card statement, it looks like all that happens virtually, and only one statement comes in at the end. Maybe this combining only happens for international cards.

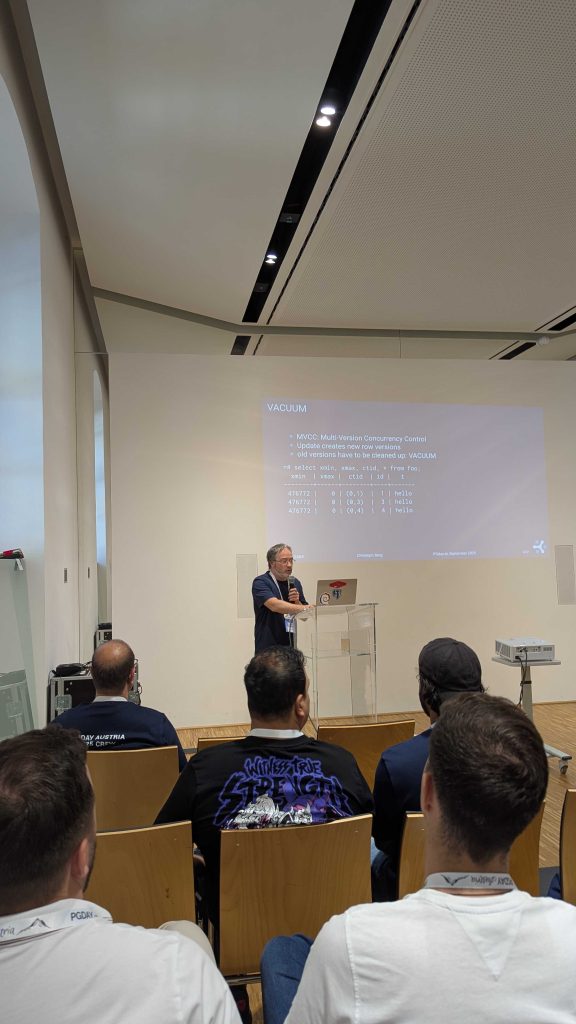

We got off the bus a kilometer away from Sentosa Island and walked the rest of the way. We went on the Sentosa Boardwalk, which is itself a tourist attraction. I was using Organic Maps to navigate to the hotel Resorts World Sentosa, but Organic Maps’ route led us through an amusement park. I tried asking the locals (people working in shops) for directions, but it was a Chinese-speaking region, and they didn’t understand English. Fortunately, we managed to find a local who helped us with the directions.

A shot of Sentosa Boardwalk.

Following the directions, we somehow ended up having to walk on a road which did not have pedestrian paths. Singapore is a country with strict laws, so we did not want to walk on that road. Avoiding that road led us to the Michael Hotel. There was a person standing at the entrance, and I asked him for directions to Resorts World Sentosa. The person told me that the bus (which was standing at the entrance) would drop me there! The bus was a free service for getting to Resorts World Sentosa. Here I parted ways with Badri, who went to his aunt’s place.

I got to the Resorts Sentosa and showed my ticket to get in. There were two zones inside - the first was a room with a glass wall separating the audience and the players. This was the room to watch the game physically, and resembled a zoo or an aquarium. :) The room was also a silent room, which means talking or making noise was prohibited. Audiences were only allowed to have mobile phones for the first 30 minutes of the game - since I arrived late, I could not bring my phone inside that room.

The other zone was outside this room. It had a big TV on which the game was being broadcast along with commentary by David Howell and Jovanka Houska - the official FIDE commentators for the event. If you don’t already know, FIDE is the authoritative international chess body.

I spent most of the time outside that silent room, giving me an opportunity to socialize. A lot of people were from Singapore. I saw there were many Indians there as well. Moreover, I had a good time with Vasudevan, a journalist from Tamil Nadu who was covering the match. He also asked questions to Gukesh during the post-match conference. His questions were in Tamil to lift Gukesh’s spirits, as Gukesh is a Tamil speaker.

Tea and coffee were free for the audience. I also bought a T-shirt from their stall as a souvenir.

After the game, I took a shuttle bus from Resorts World Sentosa to a metro station, then travelled to Pasir Ris by metro, where Badri was staying with his aunt. I thought of getting something to eat, but could not find any cafés or restaurants while I was walking from the Pasir Ris metro station to my destination, and was positively starving when I got there.

Badri’s aunt’s place was an apartment in a gated community. On the gate was a security guard who asked me the address of the apartment. Upon entering, there were many buildings. To enter the building, you need to dial the number of the apartment you want to go to and speak to them. I had seen that in the TV show Seinfeld, where Jerry’s friends used to dial Jerry to get into his building.

I was afraid they might not have anything to eat because I told them I was planning to get something on the way. This was fortunately not the case, and I was relieved to not have to sleep with an empty stomach.

He said that even if you forget your laptop in a public space, you can go back the next day to find it right there in the same spot. He said if you forget your laptop somewhere, you will find it at the same spot the next day. I also learned that owning cars was discouraged in Singapore - the government imposes a high registration fee on them, while also making public transport easy to use and affordable. I also found out that 7-Eleven is not that popular among residents in Singapore, unlike in Malaysia or Thailand.

The next day was our third and final day in Singapore. We had a bus in the evening to Johor Bahru in Malaysia. We got up early, had breakfast, and checked out from Badri’s aunt’s home. A store by the name of Cat Socrates was our first stop for the day, as Badri wanted to buy some stationery. The plan was to take the metro, followed by the bus. So we got to Pasir Ris metro station. Next to the metro station was a mall. In the mall, Badri found an ATM where our cards worked, and we got some Singapore dollars.

It was noon when we reached the stationery shop mentioned above. We had to walk a kilometer from the place where the bus dropped us. It was a hot, sunny day in Singapore, so walking was not comfortable. We had to go through residential areas in Singapore. We saw some non-touristy parts of Singapore.

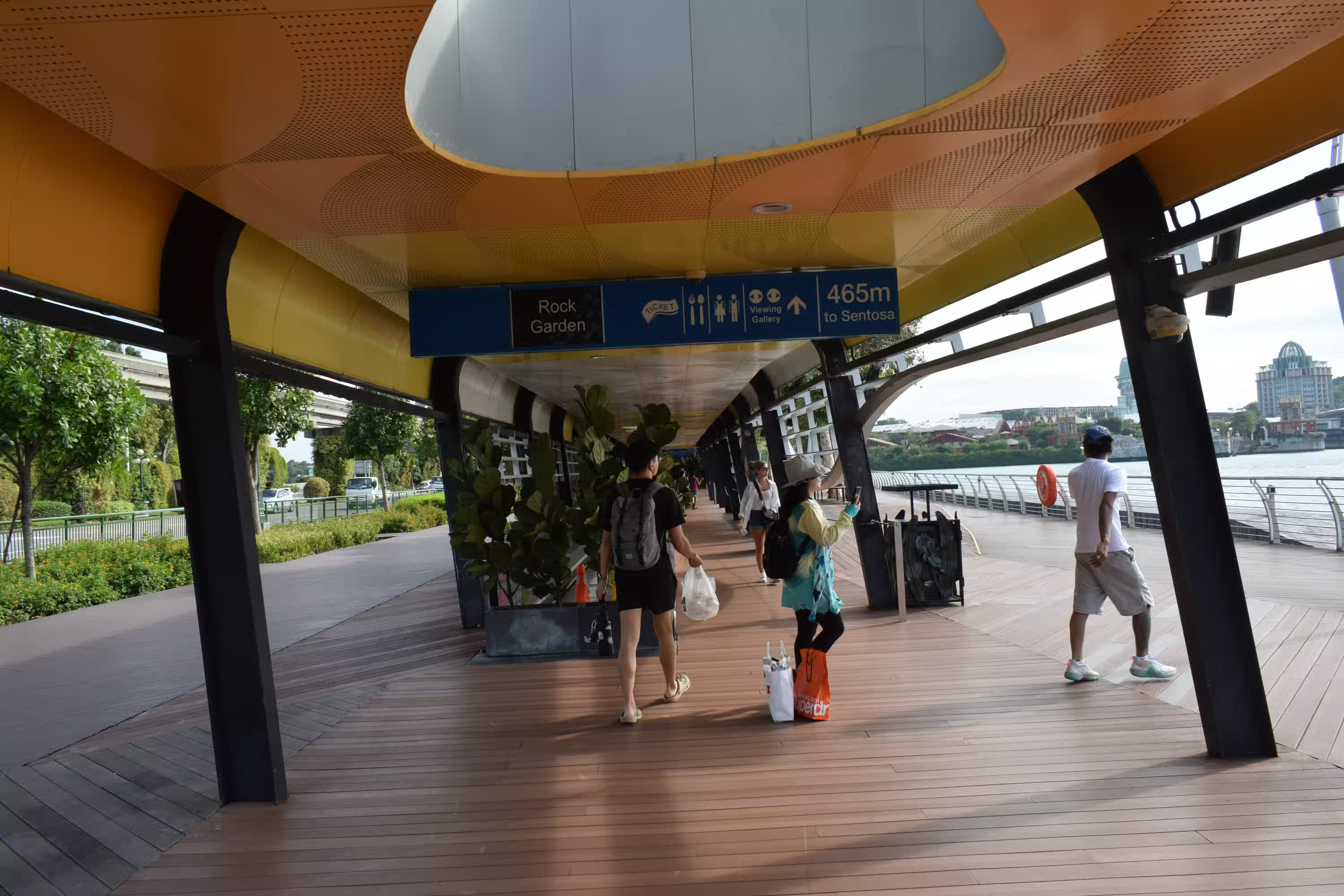

After we were done with the stationery shop, we went to a hawker center to get lunch. Hawker centers are unique to Singapore. They have a lot of shops that sell local food at cheap prices. It is similar to a food court. However, unlike the food courts in malls, hawker centers are open-air and can get quite hot.

This is the hawker center we went to.

To have something, you just need to buy it from one of the shops and find a table. After you are done, you need to put your tray in the tray-collecting spots. I had a kaya toast with chai, since there weren’t many vegetarian options. I also bought a persimmon from a nearby fruit vendor. On the other hand, Badri sampled some local non-vegetarian dishes.

Table littering at the hawker center was prohibited by law.

Next, we took a metro to Raffles Place, as we wanted to visit Merlion, the icon of Singapore. It is a statue having the head of a lion and the body of a fish. While getting through the AFC gates, my card was declined. Therefore, I had to buy an EZ-Link card, which I had been avoiding because the card itself costs 5 Singapore dollars.

From the Raffles Place metro station, we walked to Merlion. The place also gave a nice view of Marina Bay Sands. It was filled with tourists clicking pictures, and we also did the same.

Merlion from behind, giving a good view of Marina Bay Sands.

After this, we went to the bus stop to catch our bus to the border city of Johor Bahru, Malaysia. The bus was more than an hour late, and we worried that we had missed the bus. I asked an Indian woman at the stop who also planned to take the same bus, and she told us that the bus was late. Finally, our bus arrived, and we set off for Johor Bahru.

Before I finish, let me give you an idea of my expenditure. Singapore is an expensive country, and I realized that expenses could go up pretty quickly. Overall, my stay in Singapore for 3 days and 2 nights was approx. 5500 rupees. That too, when we stayed one night at Badri’s aunt’s place (so we didn’t have to pay for accomodation for one of the nights) and didn’t have to pay for a couple of meals. This amount doesn’t include the ticket for the chess game, but includes the costs of getting there. If you are in Singapore, it is likely you will pay a visit to Sentosa Island anyway.

Stay tuned for our experiences in Malaysia!

Credits: Thanks to Dione, Sahil, Badri and Contrapunctus for reviewing the draft.